About

I work on 3D computer vision, building AI systems that can interact with real-world 3D environments from visual input, much as humans do. This includes inferring shapes, materials, cameras, lighting, motion, and functional properties from limited 2D observations.

I am currently a PhD student at HKU, advised by Shenghua Gao and working closely with Yi Ma. From 2020 to 2024, I was a PhD student at ShanghaiTech University. From winter 2022 to spring 2023, I interned at MSRA, working with Xin Tong and Jiaolong Yang. I completed my undergraduate degree at SCUT in 2020.

Research

See Google Scholar for a complete list of publications.

* indicates equal contribution.

CUPID: Generative 3D Reconstruction via Joint Object and Pose Modeling

Preprint, 2025

Creates a canonically posed 3D object and an object-centric camera from a single image in just a few seconds.

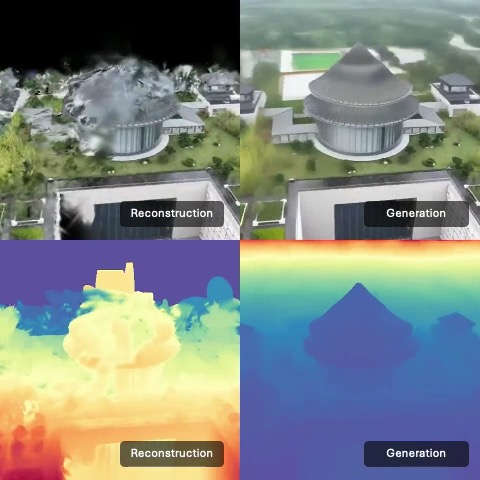

GenFusion: Closing the Loop between Reconstruction and Generation via Videos

CVPR, 2025

Generative scene inpainting with a reconstruction-driven video generation model.

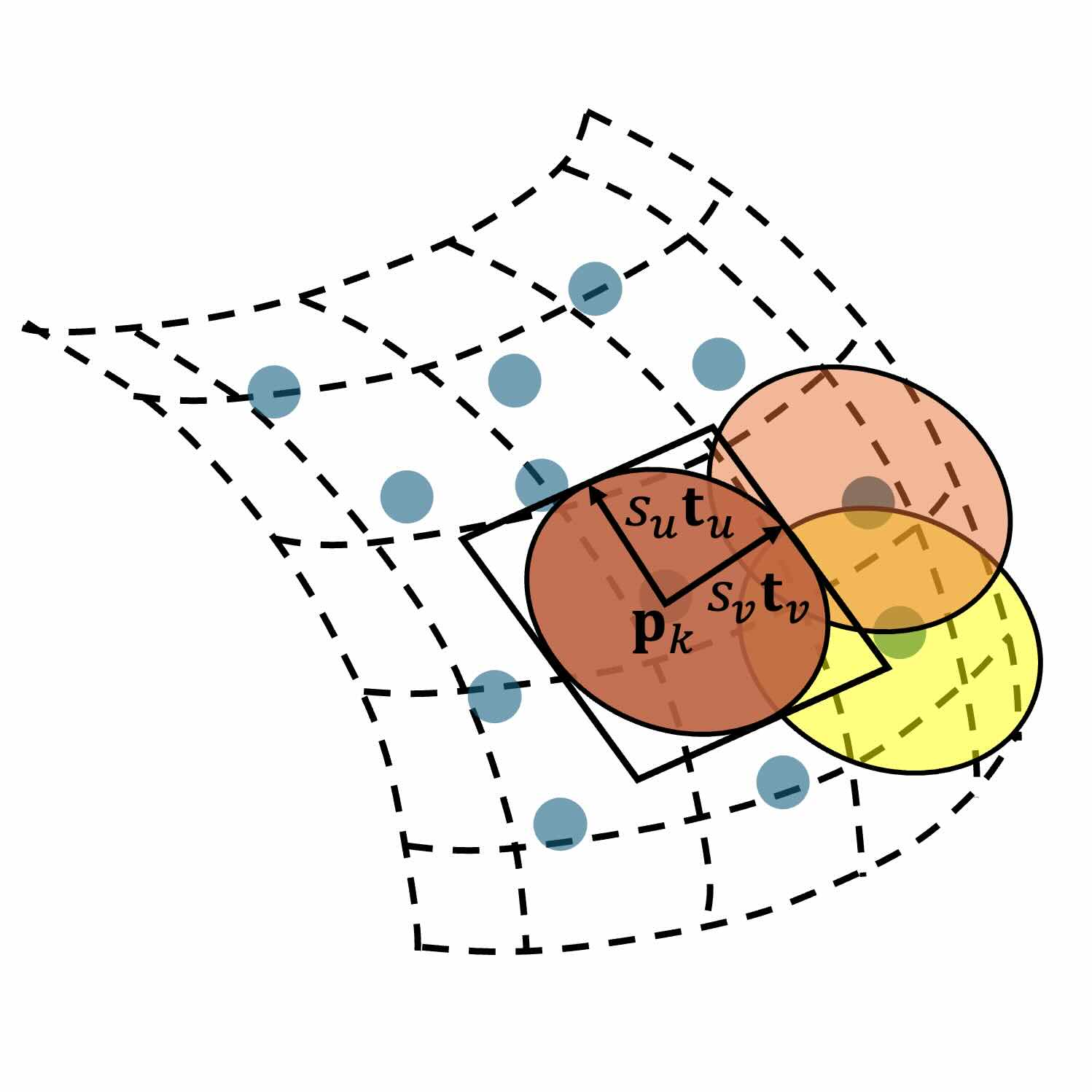

2D Gaussian Splatting for Geometrically Accurate Radiance Fields

SIGGRAPH, 2024 (Most Influential Paper #1)

Surfels and a ray-casting-based differentiable rasterizer enable efficient, high-fidelity geometry and appearance reconstruction from real images.

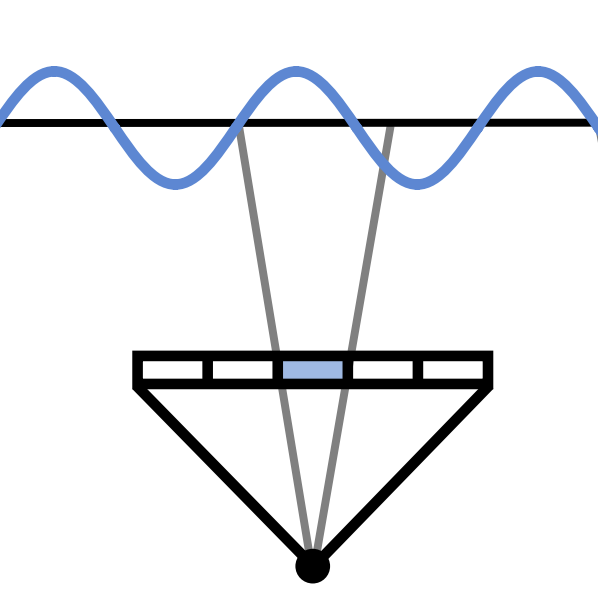

Mip-Splatting: Alias-free 3D Gaussian Splatting

CVPR, 2024 (Oral, Best Student Paper)

Mip-filters enable alias-free scene synthesis with 3D Gaussian Splatting.

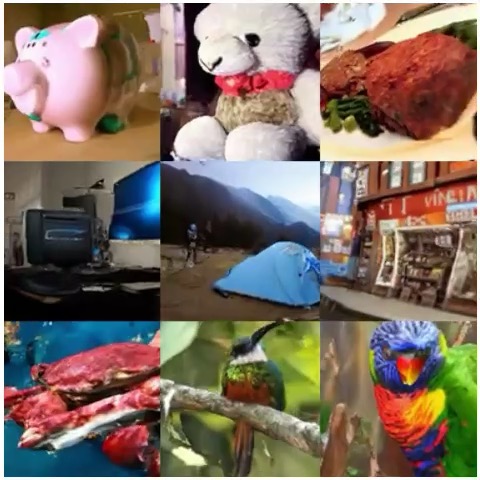

3D-aware Image Generation using 2D Diffusion Models

ICCV, 2023

Creates a 3D-consistent scene from a single image via iterative multiview RGBD sampling and fusion.

Service

Journal reviewer: TPAMI, TVCG ...

Conference reviewer: SIGGRAPH, SIGGRAPH Asia, CVPR, ICCV, ECCV, ICLR, NeurIPS ...